“The work arises out of and through the activity of the artist. But through and from what is the artist that which he is? Through the work; for the German proverb ‘the work praises the master’ means that the work first lets the artist emerge as a master of art. The artist is the origin of the work. The work is the origin of the artist. Neither is without the other. Nonetheless neither is the sole support of the other. Artist and work are each, in themselves and in their reciprocal relation, on account of a third thing, which is prior to both; on account, that is, of that from which both artist and artwork take their names, on account of art.”

“Das Werk entspringt aus der und durch die Tätigkeit des Künstlers. Wodurch aber und woher ist der Künstler das, was er ist? Durch das Werk; denn, daß ein Werk den Meister lobe, heißt: das Werk erst läßt den Künstler als einen Meister der Kunst hervorgehen. Der Künstler ist der Ursprung des Werkes. Das Werk ist der Ursprung des Künstlers. Keines ist ohne das andere. Gleichwohl trägt auch keines der beiden allein das andere. Künstler und Werk sind je in sich und in ihrem Wechselbezug durch ein Drittes, welches das erste ist, durch jenes nämlich, von woher Künstler und Kunstwerk ihren Namen haben, durch die Kunst.”

Martin Heidegger, 1950

info This project implements large-scale infrastructure to train and build Instagram recommendation models with future-reward information. These are engagement events collected on media the user viewed after the item being ranked. The concept has been adopted on all major Instagram product surfaces, including the Reels tab, Feed and Discovery, and is a key milestone for reinforcement learning at Instagram.

meta As part of the IGML Modeling Technologies team, I helped create infrastructure that tracks short-term and long-term engagement events occurring after the item being ranked. These engagements are used to build labels that substantially improve media recommendations on several surfaces of the Instagram app and meaningfully increase Daily Active Users and Watch Time in the app.

process In close collaboration with product teams from Discovery, I provided labels to improve Reels recommendations in the Discovery view. After this first launch, I streamlined the onboarding process, spawned projects to extend the time horizon of future rewards, and brought future rewards to 8 Instagram ranking models in 2023.
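As an illustration of the idea of a future-reward label, here is a minimal sketch. It assumes a hypothetical event schema, a fixed horizon of subsequent items, and a geometric discount. None of these details come from the production pipeline, whose windowing and weighting are not described here. Each impression is labeled with the discounted engagement the same user showed on the items viewed after it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One logged impression (hypothetical schema, for illustration only)."""
    user_id: str
    media_id: str
    position: int      # order in which the user viewed the media
    engagement: float  # e.g. 1.0 for a like or share, 0.0 for none


def future_reward_labels(events, horizon=5, discount=0.9):
    """Label each impression with discounted engagement on the next `horizon` items.

    `horizon` and `discount` are assumed hyperparameters, not documented values.
    """
    by_user = {}
    for e in events:
        by_user.setdefault(e.user_id, []).append(e)

    labels = {}
    for user_events in by_user.values():
        user_events.sort(key=lambda e: e.position)
        for i, e in enumerate(user_events):
            future = user_events[i + 1 : i + 1 + horizon]  # items viewed afterwards
            labels[(e.user_id, e.media_id)] = sum(
                discount ** k * f.engagement for k, f in enumerate(future)
            )
    return labels
```

A smaller discount emphasizes engagement right after the ranked item. A longer horizon captures longer-term effects, which mirrors the effort described above to extend the timeline of future rewards.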

info This is a workshop on effective collaboration for engineers. It has been held for engineering teams at Meta AI and Instagram Ranking Infra. The workshop applies a 7-step process, the Get Stuff Done Wheel, to an engineering team. It identifies tools, techniques and behaviors that help software engineers at Meta complete each step of collaboration in their projects effectively. As a result, 19 out of 20 engineers found the workshop helpful for their next half's planning, and 20 out of 20 engineers learned some or many new collaboration techniques.

meta I designed this workshop based on my experience as a Software Engineer at Meta. It applies the 7-step Get Stuff Done Wheel from Radical Candor by Kim Scott (Chapter 4). The concept was originally developed for managers; the workshop applies it specifically to software engineers. It focuses on tools and functions available to software engineers at Meta, such as Notification Bots and Code Review Tools among many others, to complete every step of a project effectively. After the workshop, a “Collaboration Cheat Sheet” is handed out to all participants as a reference for upcoming projects.

process I produced the “Collaboration Cheat Sheet” with the help of the Meta Open Arts Lab New York. Feedback from participants was collected through surveys with Google Forms. The artwork is inspired by works from Taeyoon Choi.

info As part of Meta AI, I shipped critical new infrastructure to run AI inference on images and videos for over 100 use cases within Meta, including Instagram, WhatsApp, Facebook, Messenger, Reels and Oculus.

meta As a founding member of Meta’s Media Understanding Infrastructure team, I helped build large-scale infrastructure that processes images and videos at hundreds of thousands of queries per second (100s of kQPS). I helped grow the team from 5 to 15 engineers. The collaboration included infrastructure engineers, AI researchers, front-end engineers, data scientists, designers, program managers and privacy officers.

process I first implemented a C++ execution framework that runs media inference as a multi-model graph, in which the output of one AI model can be configured as the input to another. To prove the value of the system, I onboarded the first high-profile use cases, including models detecting election interference for the 2020 US election, a company-wide top priority at that time. Next, I identified infrastructure usability as a key scaling limitation. I created a developer-efficiency workstream for 5 engineers, identified key pain points in use case onboarding, and spawned projects including model management, a traffic gateway and signal configuration. Results of the workstream were tracked every six months with partner surveys and quantile statistics on use case onboarding steps. My work increased partners' overall MOS rating in five consecutive halves. I onboarded critical use cases, including copyright detection for all media uploaded to Meta products, detection of dangerous content for Messenger Kids, and media embeddings for Reels ranking.
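The multi-model graph idea, where one model's output feeds another model, can be sketched as a dependency graph executed in topological order. This is a Python illustration only; the actual framework is C++ and its configuration format is not described here. The `models` and `inputs` dictionaries and the reserved `"media"` input name are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List


def run_graph(
    models: Dict[str, Callable[..., object]],
    inputs: Dict[str, List[str]],
    media: object,
) -> Dict[str, object]:
    """Run every model once, after all the models whose outputs it consumes.

    `inputs[name]` lists the upstream nodes of model `name`; the special node
    "media" stands for the raw image or video (a hypothetical convention).
    """
    graph = {name: set(deps) for name, deps in inputs.items()}
    outputs: Dict[str, object] = {"media": media}
    for name in TopologicalSorter(graph).static_order():
        if name == "media":
            continue  # raw input, nothing to execute
        args = [outputs[dep] for dep in inputs[name]]  # keep configured argument order
        outputs[name] = models[name](*args)
    return outputs
```

For example, an embedding model can feed both a classifier and a tagging model that also consumes the classifier's result. The sorter guarantees each model sees finished upstream outputs and raises `graphlib.CycleError` if a configuration contains a cycle.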

info An implementation (github) of multi-agent reinforcement learning with deep SARSA agents using TensorFlow.js. n agents each train a SARSA policy network on gridworld, a game similar to the gym-gridworld environment.

intuition Distributing exploratory moves over n agents speeds up game exploration and overall convergence.

algorithm The algorithm presented in this codebase selects an action from the predictions of n simultaneously trained SARSA policy networks. On startup, a master process spawns n worker processes, one per agent. Workers communicate with each other via IPC messages using the Node.js cluster module. To obtain an action, agent A broadcasts a prediction request to the other n-1 agents and generates one prediction from its own SARSA network. Each worker runs the same agent, trains on the same game, and both sends and answers prediction requests. Once all agents have responded, the action with the largest value (Q-value) across all n predictions is chosen. During training, a factor epsilon in [epsilon_min, 1] sets the probability of taking a random action instead of the policy action when moving to the next state, i.e. the implementation is epsilon-greedy. Epsilon decays as the number of training episodes grows. Each policy network has 4 hidden layers with ReLU activations.
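The action-selection step described above can be sketched in a few lines of Python. This is a translation of the idea, not the TensorFlow.js code from the repository. It pools the Q-value vectors returned by all n agents, takes the single largest value unless an epsilon-greedy coin flip calls for exploration, and decays epsilon towards `epsilon_min`. The decay constants and the discount `gamma` are assumptions. The SARSA target is the standard on-policy target r + γ·Q(s′, a′).

```python
import random
from typing import Sequence


def select_action(q_predictions: Sequence[Sequence[float]], epsilon: float,
                  rng: random.Random) -> int:
    """Epsilon-greedy choice over the pooled predictions of all n agents."""
    n_actions = len(q_predictions[0])
    if rng.random() < epsilon:
        return rng.randrange(n_actions)  # explore: uniformly random action
    # exploit: the action holding the largest Q-value in any agent's prediction
    _, best_action = max(
        (q, action) for prediction in q_predictions for action, q in enumerate(prediction)
    )
    return best_action


def decay_epsilon(epsilon: float, epsilon_min: float = 0.05, decay: float = 0.995) -> float:
    """Shrink epsilon after each episode, never below epsilon_min (assumed constants)."""
    return max(epsilon_min, epsilon * decay)


def sarsa_target(reward: float, next_q: Sequence[float], next_action: int,
                 gamma: float = 0.99, done: bool = False) -> float:
    """On-policy SARSA target: r + gamma * Q(s', a'), where a' is the action actually taken."""
    return reward if done else reward + gamma * next_q[next_action]
```

Pooling by maximum means any single agent that has learned a high-value move can steer the whole team. This is the "collective experience" effect described in the result below.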

result Multiple collaborating agents need fewer episodes to solve gridworld. I think of it like a team of people who initially explore a maze individually by picking random routes. As they build up knowledge, they choose turns at intersections based on their collectively learned experience and reach the end faster than any of them would alone.

I have been passionate about gathering and analyzing data on voting behaviour in politics, specifically in the European Parliament. With the breakthrough of neural networks as a supervised learning technique for training AI models, this dataset becomes very powerful. The publicly accessible results are meant to serve as, and to support, alternatives to corporate news coverage of politics.

This codebase builds neural network models for Members of the European Parliament that predict their votes on future legislation. The model computes a parliamentarian's vote on a given piece of legislation using the positions of relevant organizations and lobbyists as input. The trained models achieve an average accuracy of 92.5% across current Members of the European Parliament.

Neural networks are a machine learning technique for supervised learning. A network is trained with labeled samples: each sample adjusts the network's parameters so that its output comes as close as possible to the sample's label. Training minimizes the cost, a measure of the difference between the label values and the computed values, and each training iteration reduces this cost by updating the network parameters. Training continues until further iterations no longer decrease the cost significantly, i.e. the cost has converged to a minimum. This project uses multiclass one-vs-all classification with K=3 classes: for, against, abstain.
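To make one-vs-all with K=3 concrete, here is a small sketch of the label encoding, a cost, and the decoding of a prediction. The cost is the logistic (binary cross-entropy) cost summed over the three sigmoid outputs. That is an assumption matching the sigmoid output layer described below, not a confirmed detail of the codebase.

```python
import math
from typing import List, Sequence

CLASSES = ("for", "against", "abstain")  # K = 3


def one_hot(vote: str) -> List[int]:
    """One-vs-all label: 1 for the vote's class, 0 for the other K-1 classes."""
    return [1 if c == vote else 0 for c in CLASSES]


def cost(y: Sequence[int], y_hat: Sequence[float], eps: float = 1e-12) -> float:
    """Binary cross-entropy summed over the K one-vs-all outputs (assumed cost)."""
    return -sum(
        t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
        for t, p in zip(y, y_hat)
    )


def predict(y_hat: Sequence[float]) -> str:
    """Decode a prediction: the class whose one-vs-all output is most confident."""
    return CLASSES[max(range(len(CLASSES)), key=lambda k: y_hat[k])]
```

A prediction close to the label, such as `[0.1, 0.9, 0.1]` for an "against" vote, yields a much lower cost than a prediction that favours the wrong classes. Gradient descent drives the network parameters in that direction.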

Training and testing are based on the votes of over 3600 present and past EU Parliamentarians and the positions of 120 lobbyists and organizations on ~90 bills and legislative proposals between 2013 and 2018. Each neural network is trained for a specific Member of the European Parliament (MEP), using that MEP's votes as sample labels. Each sample represents the positions of organizations on one bill.

The next elections to the European Parliament are expected to be held on 23–26 May 2019.

Prediction accuracy

On the test set (30% of the dataset), the accuracy is 94.3% for Parliamentarians with 33 votes in the dataset and 92.5% for those with 32 or more votes.

Network architecture

one-vs-all classification for Parliamentarian P.

labels = Parliamentarian vote (For/Against/Abstain) on an EU bill

features = Positions of Organizations and Lobbyists towards bills

number of classes K=3 (for/against/abstain)

number of features nx: organizations that took positions on the bills P voted on

13 hidden layers of size nx activated by ReLU function.

Output layer activated by Sigmoid function.

Between 20 and 33 training samples per Parliamentarian P.

Training set y_train holds 70% of labeled samples. Testing set holds 30% of labeled samples.
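The architecture listed above can be sketched as a plain-Python forward pass. It has 13 ReLU hidden layers of width nx and a K=3 sigmoid output layer, followed by a 70/30 train/test split. The He-style random initialization, the numeric encoding of organization positions, and the function names are assumptions for illustration. The original project builds on Andrew Ng's course utilities, not on this code.

```python
import math
import random
from typing import List


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def init_network(nx: int, hidden_layers: int = 13, k: int = 3, seed: int = 0):
    """(W, b) per layer: `hidden_layers` ReLU layers of width nx, then K sigmoid units."""
    rng = random.Random(seed)
    sizes = [nx] * (hidden_layers + 1) + [k]  # input, 13 hidden layers, output
    return [
        ([[rng.gauss(0.0, math.sqrt(2.0 / n_in)) for _ in range(n_in)] for _ in range(n_out)],
         [0.0] * n_out)
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


def forward(params, x: List[float]) -> List[float]:
    """Propagate one sample; x holds one (assumed numeric) position per organization."""
    a = x
    for i, (W, b) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, a)) + bias for row, bias in zip(W, b)]
        a = [sigmoid(v) for v in z] if i == len(params) - 1 else [relu(v) for v in z]
    return a


def split(samples, train_fraction: float = 0.7, seed: int = 0):
    """Shuffle, then keep 70% of samples for training and 30% for testing."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]
```

With only 20 to 33 samples per Parliamentarian, a split of 30 samples leaves 21 for training and 9 for testing. That small test set is worth keeping in mind when reading the accuracy figures.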

Hyper parameter optimization

The best predictions are achieved by a network with 13 hidden layers, which predicts votes with an accuracy of 92.5%. The number of features nx is between 40 and 100 for each Parliamentarian, and each hidden layer has size nx. We use the learning rate alpha=0.03 because it is the smallest learning rate whose cost curve flattens out close to the minimal cost within 3000 training iterations.
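The learning-rate rule above can be written down explicitly. Among the candidate alphas, pick the smallest whose cost at the end of the iteration budget is within a tolerance of the best final cost. The tolerance value and the shape of the recorded cost curves are assumptions; the text does not state how "close to minimal" was judged.

```python
from typing import Dict, Sequence


def pick_learning_rate(cost_curves: Dict[float, Sequence[float]],
                       tolerance: float = 0.01) -> float:
    """Smallest alpha whose final cost is within `tolerance` of the best final cost.

    cost_curves maps alpha -> cost per iteration, recorded up to the iteration
    budget (3000 in the text). `tolerance` is an assumed threshold.
    """
    best_final = min(curve[-1] for curve in cost_curves.values())
    good_enough = [alpha for alpha, curve in cost_curves.items()
                   if curve[-1] <= best_final + tolerance]
    return min(good_enough)
```

Preferring the smallest adequate alpha trades a little convergence speed for more stable updates.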

Credits

This project uses utility methods from the course Neural Networks and Deep Learning taught by Andrew Ng. The dataset was gathered by the politix.io team, which extracted Parliamentarian votes from parltrack.eu. 🙏

Prelude

In 2013 my friends and I co-founded politix.io. The project aims to offer a new angle on how we look at a government's work. We believe that correlating organizations, Parliamentarians and citizens through their votes on legislation lets us understand sentiment clusters beyond official coalitions. The positions of organizations on legislation guide Parliamentarians in their decisions. While this has long been a common intuition, politix.io researches those correlations. The project was funded by Advocate Europe in 2016 🙏, won the European Citizenship Award 2016 and was presented at RE:PUBLICA 2017 in Berlin ✨.

Check out the source code on github.

info Art and tech studies at the School for Poetic Computation (SFPC) in New York City, motivated by the great potential technology holds for creative expression.

meta As part of a 10-week intensive program at SFPC NYC, I had the opportunity to study with 18 art and tech enthusiasts from all over the world, of all ages and backgrounds. Under the supervision of Zach Lieberman, Taeyoon Choi, Morehshin Allahyari, Kelli Anderson and numerous outstanding TAs, we delved deep into posthumanism, building 1-bit computers, visual art with openFrameworks, and origami. The curriculum was organized into four tracks: hardware, critical theory, software and crafts.

process I developed the works posted here throughout my studies at SFPC. More impressions from the program are on my Instagram and my art page.

info This project makes it possible to set WebRTC screen-sharing constraints in Mozilla Firefox according to the W3C standard. A brief deep dive into Firefox's media engine.

meta WebRTC has become a strong standard in the video conferencing and telecommunications industry, with web support in Firefox, Chrome and Safari (since 2017) and mobile support on Android and iOS (see Amazon Echo View). Oddly enough, Mozilla Firefox did not support constraints on WebRTC screen-sharing sessions. The resolution defaulted to that of the capture source (screen, window or tab) and the frame rate was hard-coded to 3 fps, because the constraints object passed to acquire local media was simply not parsed.

process With a mentor from Mozilla Firefox, Jan-Ivar Bruaroey, I took on this fairly old issue. The steps: Fetch project from mercurial repository -> Setup a firefox dev environment -> Build and install the project -> Reverse engineer the Firefox Media Engine (specifically desktop capture) -> Incorporate parsed media constraints -> Upload patch -> Released in Firefox 38 -> Blog Post

Fixed bugs 1121047 and 1211658. As a result, screen-sharing constraints are now supported in all major browsers.

info This project (github) explores the react-native Animated and PanResponder libraries to build a Tinder-like swipe experience for Android and iOS. The application pulls images from the Imgur Gallery API and renders the content using react-native on mobile devices. A user can vote on content by swiping left or right. Application state is implemented with a Baobab tree structure.

meta How native are user experiences built with react-native? By promising a native experience for Android and iOS with a web development stack based on react.js, react-native has the potential to cut development time significantly. It was an exciting simplification of my engineering stack that I really wanted to try. This project focuses on a Tinder-like swipe animation as a measure of the experience react-native can achieve. No backend is implemented for the sake of this experiment. The result is very smooth, and I personally could not tell the app apart from an actual native implementation. Reach out and I can send a link to install the app. Using Baobab instead of Redux as the state tree simplified the code base even more.

process Using nets and bluebird promises, an Imgur Adapter pulls images from the Imgur Gallery API and forwards them via ActionCreators to a Baobab-based StateTree. The images are then rendered as a set of cards by the Cards Component, which listens for updates to the state tree and binds the react-native libraries Animated and PanResponder for the actual animation.
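The one-directional data flow described above runs from adapter to action creators to state tree to listening component. It can be sketched as a tiny observable store. This is a Python illustration of the pattern only. `StateTree`, `add_images` and `vote` are hypothetical names, not Baobab's API or the project's JavaScript code.

```python
from typing import Any, Callable, Dict, List

Listener = Callable[[Dict[str, Any]], None]


class StateTree:
    """A tiny observable store in the spirit of a Baobab tree (hypothetical, not Baobab's API)."""

    def __init__(self, initial: Dict[str, Any]):
        self._state = dict(initial)
        self._listeners: List[Listener] = []

    def get(self, key: str) -> Any:
        return self._state[key]

    def set(self, key: str, value: Any) -> None:
        self._state[key] = value
        for listener in list(self._listeners):
            listener(dict(self._state))  # components re-render from a snapshot

    def on_update(self, listener: Listener) -> None:
        self._listeners.append(listener)


def add_images(tree: StateTree, images: List[str]) -> None:
    """Action creator: append images fetched by the adapter to the card stack."""
    tree.set("cards", tree.get("cards") + images)


def vote(tree: StateTree, liked: bool) -> None:
    """Action creator: record a swipe on the top card and remove it from the stack."""
    top, *rest = tree.get("cards")
    tree.set("votes", tree.get("votes") + [(top, liked)])
    tree.set("cards", rest)
```

Because the cards component only reads from the tree and reacts to updates, the swipe animation code never has to know where the images came from.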

Please fork the project on github and reach out to get an invite to install the app on your phone. The project was implemented over New Year 2016/2017.

info The device wall combines smartphones and tablets mounted on a wall into a uniform canvas. Content is coordinated across the devices' viewports, and each device renders the excerpt it is responsible for based on its position relative to the other devices, like a tile in a mosaic.

meta This project breaks up the concept of isolated, self-contained viewports. It coordinates Android devices (phones and tablets) into a collective screen and explores new interaction models with mobile devices, one of them being tile-based interactions such as a memory match game.

process A socket.io server (inherited from a previous project) connects the devices in a star topology, and static content is served to all devices over HTTP(S). Registration and calibration are done device by device on the actual mounted wall. Wi-Fi proved unreliable as the network link, so the USB-based adb link is used instead. Content rendering is based on HTML5 and CSS using flexbox. CSS transitions are forced onto the GPU using transform: translateZ(0).
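The per-device excerpt can be derived from each device's calibrated position on the wall. The following sketch shows one way to do it, with assumptions noted inline. Positions are measured in millimetres, the shared canvas has a fixed pixel density per millimetre, and pixels are square so one scale factor fits both axes. The transform string would be applied with `transform-origin: 0 0`. The calibration format and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Calibrated placement of one screen on the wall (hypothetical fields)."""
    x_mm: float      # left edge of the visible screen area, measured on the wall
    y_mm: float      # top edge
    width_mm: float  # physical width of the visible screen area
    width_px: int    # horizontal CSS pixels of the device


def viewport(device: Device, canvas_px_per_mm: float):
    """Translation and scale that map the device's share of the canvas onto its screen."""
    tx = -device.x_mm * canvas_px_per_mm  # shift the canvas so this tile starts at 0
    ty = -device.y_mm * canvas_px_per_mm
    scale = device.width_px / (device.width_mm * canvas_px_per_mm)  # assumes square pixels
    return tx, ty, scale


def css_transform(device: Device, canvas_px_per_mm: float) -> str:
    """CSS transform (use with transform-origin: 0 0); translateZ(0) keeps it on the GPU."""
    tx, ty, s = viewport(device, canvas_px_per_mm)
    return f"scale({s}) translate({tx}px, {ty}px) translateZ(0)"
```

Every device loads the same content and only its transform differs. Content therefore stays continuous across bezels as long as the calibration measurements are accurate.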

The project was exhibited at the Byron Nelson Golf Tournament and the Pebble Beach Golf Tournament.

info An addition to politix.io focused on engaging users. New users are presented with a questionnaire asking how they would vote on key legislation of the European Parliament. Based on this seed data, the politix.io social graph is queried to find the 3 Representatives and 3 Lobbyists whose sentiment correlates most strongly with the user's.
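Matching a user's seed votes against Representatives or Lobbyists can be sketched as a simple agreement score over shared bills. Votes are encoded as +1 (for), -1 (against) and 0 (abstain), and the score is the mean product on bills both sides voted on, ranging from -1 to 1. This encoding and score stand in for whatever correlation measure politix.io actually uses, which is not specified here.

```python
from typing import Dict, List

VOTE_VALUE = {"for": 1, "against": -1, "abstain": 0}  # assumed encoding


def agreement(a: Dict[str, str], b: Dict[str, str]) -> float:
    """Mean product of encoded votes on the bills both sides voted on, in [-1, 1]."""
    shared = a.keys() & b.keys()
    if not shared:
        return 0.0
    return sum(VOTE_VALUE[a[bill]] * VOTE_VALUE[b[bill]] for bill in shared) / len(shared)


def top_matches(user: Dict[str, str], actors: Dict[str, Dict[str, str]], k: int = 3) -> List[str]:
    """The k actors (e.g. Representatives) most aligned with the user's seed votes."""
    scored = [(agreement(user, votes), name) for name, votes in actors.items()]
    scored.sort(key=lambda t: (-t[0], t[1]))  # best score first, ties by name
    return [name for _, name in scored[:k]]
```

Running `top_matches` once over Representatives and once over Lobbyists yields the two lists of three. A real system would likely also down-weight matches based on only a handful of shared bills.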

meta As part of the Debug Politics Hackathon 2016 in San Francisco I focused on the problem of engaging users at https://politix.io. While the platform has a rich data set and deep links between Citizens, Representatives, Lobbyists and Bills (legislation), usage analytics have shown that most users drop off quickly from the experience.

process In a team of three we conceptualized, implemented, deployed and pitched a solution for the engagement problem in a single weekend at the Debug Politics Hackathon.

Check out the pitching session at minute 41:30.

info Politix.io takes a data-driven approach to political journalism and news. Founded in 2014, the platform gives citizens a unique insight into the political work of the parliaments that represent them and make decisions on their behalf.

meta This project creates a social graph of Citizens, Representatives, Lobbyists and Bills (legislation). The bills, as the materialized outcome of a parliament's work, are used to correlate the sentiment of Citizens, Representatives and Lobbyists. This gives a new insight into political decision making and empowers all stakeholders, most significantly citizens, a) to understand the political context behind the issues being decided and b) to raise their voice and close the feedback loop between citizens and representatives.

process I founded the project with two political scientists based in Brussels, Belgium, the heart of the European Union. The project has been driven by a clear view of how to make a parliament's work transparent. We believe that building a social graph of all market forces and visualizing their connections will eventually break up the traditional “us vs. them” dynamics of politics and allow for collaboration oriented toward results rather than folklore. We implemented the project for the European Parliament (https://eu.politix.io) first and were funded by Advocate Europe in 2015. Since then we have grown the project as a non-profit organization and presented it at re:publica 2017 in Berlin.

info The 2014 European Parliament election results visualized in d3.js. This project pulls election results from the public results XML and visualizes a) the composition of the parliament, with deep links to parliamentarians, b) the election results per member state, and c) the association between national parties and EU Parliament groups.

meta Spring 2014: the EU elections were coming up and D3.js had just been released, a great opportunity to experiment with new visualizations of election results. The project was published on election night, and I was excited to see significant traffic on our servers. Check out the project on elections.politix.io.

process Ruby on Rails Server -> HTTP GET public results every 20 minutes -> Parse XML and persist in Redis DB -> Start Browser and load d3.js app -> HTTP GET results data -> Bind data points to SVG elements for Rendering -> Add transitions
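The parse step of the pipeline above can be sketched with Python's standard XML parser. It aggregates seats per EU Parliament group and maps each national party to its group. The server in the project was Ruby on Rails, and the real results XML schema is not reproduced here. The `<party>` element and its `country`, `name`, `group` and `seats` attributes are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from typing import Dict, Tuple


def seats_by_group(xml_text: str) -> Counter:
    """Parliament composition: total seats per EU Parliament group (hypothetical schema)."""
    seats: Counter = Counter()
    for party in ET.fromstring(xml_text).iter("party"):
        seats[party.get("group")] += int(party.get("seats"))
    return seats


def party_groups(xml_text: str) -> Dict[Tuple[str, str], str]:
    """Association between (country, national party) and EU Parliament group."""
    return {
        (p.get("country"), p.get("name")): p.get("group")
        for p in ET.fromstring(xml_text).iter("party")
    }
```

The resulting dictionaries are the kind of compact data points that can be cached (Redis in the original pipeline) and later bound to SVG elements by the d3.js app.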

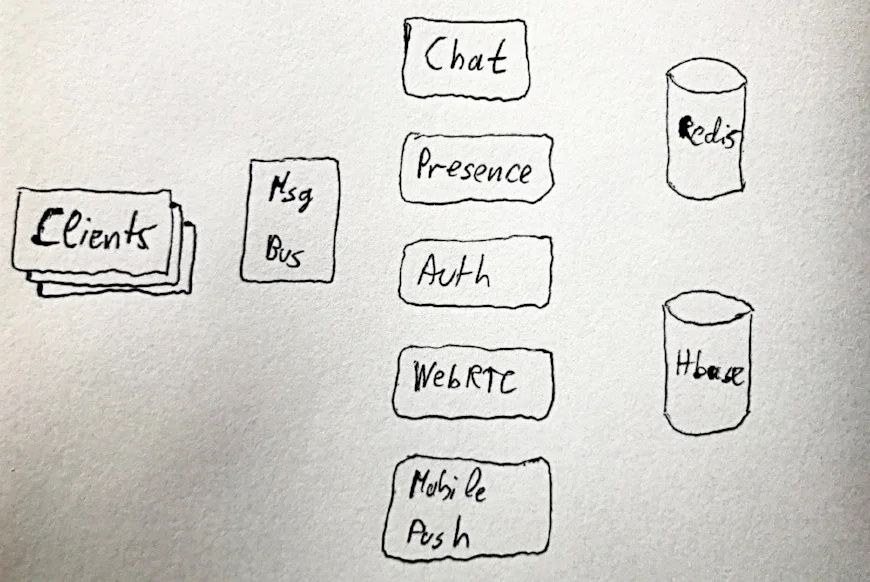

info A real-time cloud connecting web-based clients (web, desktop, mobile). The system targets the collaboration space, where the key features are messaging, presence and video conferencing.

meta The design principles can be summarized as Low Latency Message Delivery, High Service Availability, System Scalability and Low Operational Complexity. The term ‘real-time’ refers here to the cognitive real time of humans (compare Jakob Nielsen), taken to be within 250 ms for end-to-end message delivery. Users are connected in real time via mobile, web and desktop applications.

Low Latency Message Delivery

Only non-blocking execution threads and no in-band I/O in the messaging path. While packet latencies cannot be guaranteed, the number of network hops and the processing time at each hop are kept to a bare minimum. The implementation uses asynchronous callback patterns exclusively for I/O and is strictly event-driven, with no polling.

High Service Availability

Service failures are handled by rerouting the service load to another, already running service instance. This covers any reason a service could become unreachable, such as hardware failures, process crashes or misconfiguration: an active-active failover scenario.

System Scalability

The system is separated into distributed microservices, each dedicated to one main functionality: Messaging/Chat, Presence, Search, Media Upload, Mobile Push Notifications, Authentication and WebRTC signaling. Services are orchestrated by registering with a discovery service that maintains and publishes a service list and balances the service load.
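Discovery, load balancing and active-active failover fit together in a small registry. Instances register per service, routing picks a healthy instance, and an instance marked as down is simply skipped so its load moves to the instances still running. Least-loaded selection, the in-memory health flag and the class names are assumptions for this sketch. The text only says that the discovery service balances load.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Instance:
    address: str
    load: int = 0          # e.g. number of routed sessions (assumed load metric)
    healthy: bool = True


@dataclass
class Registry:
    services: Dict[str, List[Instance]] = field(default_factory=dict)

    def register(self, service: str, address: str) -> None:
        self.services.setdefault(service, []).append(Instance(address))

    def mark_down(self, address: str) -> None:
        """Called when a health check fails; the instance stops receiving traffic."""
        for instances in self.services.values():
            for inst in instances:
                if inst.address == address:
                    inst.healthy = False

    def route(self, service: str) -> str:
        """Least-loaded healthy instance; failed ones are skipped (active-active failover)."""
        candidates = [i for i in self.services.get(service, []) if i.healthy]
        if not candidates:
            raise LookupError(f"no healthy instance of {service!r}")
        chosen = min(candidates, key=lambda i: i.load)
        chosen.load += 1
        return chosen.address

    def service_list(self) -> Dict[str, List[str]]:
        """The list published to registered services: healthy addresses per service."""
        return {s: [i.address for i in insts if i.healthy] for s, insts in self.services.items()}
```

Because every instance is already running and serving traffic, failover is just a routing decision and needs no warm-up of a standby.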

Low Operational Complexity

Collaboration between clients is scoped within each customer domain, a tenant. Serving multiple tenants from a single deployment of the system keeps operational complexity low. Service affinity for each client is calculated dynamically in the message bus.
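One way to compute tenant affinity dynamically is rendezvous (highest-random-weight) hashing. This technique is swapped in for illustration; the text does not say how the message bus computes affinity. Every tenant deterministically maps to one service instance. Removing an instance only moves the tenants that were assigned to it, which suits a system where instances come and go.

```python
import hashlib
from typing import Sequence


def affinity(tenant_id: str, instances: Sequence[str]) -> str:
    """Rendezvous hashing: the instance with the highest hash weight serves the tenant."""
    def weight(instance: str) -> int:
        digest = hashlib.sha256(f"{tenant_id}:{instance}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    return max(instances, key=weight)
```

Keeping one tenant's clients on the same instance lets that instance hold the tenant's presence and conversation state locally, while the shared deployment still serves all tenants.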

Stack and Protocols

Services and the Message Bus are implemented in Node.js, using Bluebird Promises for asynchronous callbacks. While runtime exceptions are expected to be caught in the Promise chain, unhandled runtime exceptions are handled by a service runner. Messages and interactions are serialized with Facebook Thrift and persisted in a sorted key-value database (HBase), with the timestamp as the row key. Socket.io addresses, the roster search index and presence information are kept in memory in Redis (shared, and recovered using .aof files). Each service exposes health endpoints (express.js).

Message Bus

A data pump forwarding socket.io messages. Clients and services connect to the message bus, which distributes messages. It also computes service affinity (multi-tenancy), provides service discovery and validates tokens. To increase throughput, the message bus forks child processes.

Service Design

A Client connects to Message Bus using socket.io.

On socket connect, a Service establishes trust with the Message Bus using a challenge-answer protocol. Once trust is granted, it registers with the Message Bus. Once registered, the Service receives a service list from the Message Bus, which also acts as service discovery. On receiving a message, the Service verifies the message and validates the auth token sent along with it. Services: Chat, Presence, Directory (and Authentication), Mobile Push, WebRTC.
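A challenge-answer handshake like the one above can be sketched with an HMAC over a random nonce. The message bus sends a fresh challenge, the service answers with an HMAC computed from a pre-shared secret, and the bus compares the answers in constant time. The pre-shared key, its provisioning and the function names are assumptions. The text does not specify the cryptographic details of the original protocol.

```python
import hashlib
import hmac
import secrets

SHARED_SECRET = b"provisioned-out-of-band"  # hypothetical pre-shared key


def make_challenge() -> bytes:
    """Message bus side: a fresh random nonce per connection prevents replay."""
    return secrets.token_bytes(32)


def answer(challenge: bytes, secret: bytes = SHARED_SECRET) -> str:
    """Service side: prove knowledge of the secret without sending it."""
    return hmac.new(secret, challenge, hashlib.sha256).hexdigest()


def verify(challenge: bytes, response: str, secret: bytes = SHARED_SECRET) -> bool:
    """Message bus side: constant-time comparison of the expected and received answers."""
    return hmac.compare_digest(answer(challenge, secret), response)
```

Only after `verify` succeeds would the bus accept the service's registration and send it the service list.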

Bootstrap Sequence

Message Bus starts up

Services register with the Message Bus via the Challenge Answer Protocol. The Message Bus publishes the service list to all registered services.

Client authenticates with the Directory Service and receives a token and a list of service addresses

Client populates the user interface: loads the people roster, conversations and recent messages

Client reaches services (chat, presence) by sending messages to the Message Bus

Message Bus forwards messages to services

critical reflection In terms of the CAP theorem, the system was strongly consistent but did not achieve full availability. The team prioritized optimizing the uptime of individual services over handling every component failure. Some parts of the system could be replaced by serverless components.

process In a team of 4 engineers, we built this system over the course of one year using a scrum/agile development methodology. Architectural clarity and code hygiene made this project a very joyful and rewarding journey. A modified version of this system was released as the messaging app Jive Chime.

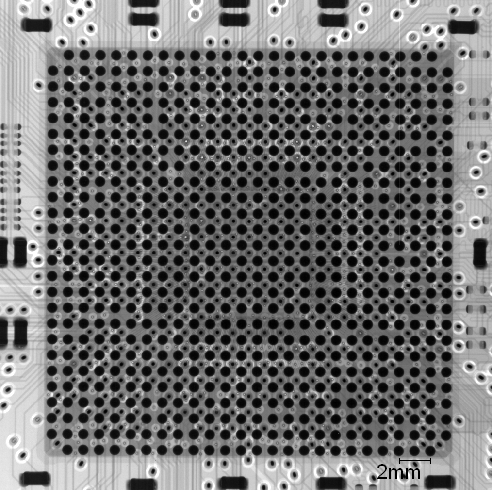

info A single-board computer in the form factor of a credit card. Based on an iMX25 ARM processor, this project populates a 182-pin SO-DIMM circuit board with all components required for a full system (CPU, clock management, power supply, flash memory, RAM). The board routes all key peripherals to an SO-DIMM socket for further integration (SPI, I2C, UART, DDR, Ethernet, USB, GPIO, PWM, CAN).

meta As part of an early-bird pool, I had the opportunity to develop a system around the soon-to-be-released iMX25 processor. It was a very exciting opportunity that required some reverse engineering, as the documentation was not yet complete.

process Working in a team of 2 engineers south of Munich, we designed the digital and analog circuits, chose the components and partnered with a third party for the layout. We ported the open source U-Boot bootloader to the iMX25 using an in-circuit debugger. Have a look at the spec of the released product.

“People who are really serious about software should make their own hardware” A. Kay

info An interface card that converts high-speed analog signals up to 10 MHz into a machine-readable format for computer-based signal processing. It is an 8-layer printed circuit board with 1000+ components, integrating 4 analog-to-digital converters (14 bit each), an Altera Cyclone II FPGA and an ARM microprocessor. The card is used to measure the precision of high-frequency, high-precision sensor signals.

meta 4 differential analog signals run through a second-order active low-pass filter. An analog-to-digital converter then converts each signal into discrete digital values at 64 megasamples per second with a resolution of 14 bits. The FPGA works similarly to an oscilloscope, using an external SSRAM as a ring buffer. The buffered samples are transferred via USB for further analysis in Matlab.
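A quick calculation shows why capturing into a buffer first makes sense. Four channels at 64 MS/s and 14 bits produce 4 × 64·10⁶ × 14 ≈ 3.58 Gbit/s of raw sample data, a rate a host link would plausibly struggle to stream continuously. The oscilloscope-style ring buffer can be sketched as follows. This is a software model of the idea, not the card's VHDL. It has a fixed capacity and overwrites the oldest sample, and a snapshot returns the samples in chronological order, as they would be read out for transfer.

```python
from typing import List


class RingBuffer:
    """Fixed-size sample buffer that overwrites the oldest sample (software model of the SSRAM)."""

    def __init__(self, capacity: int):
        self._data = [0] * capacity
        self._next = 0   # write position
        self._count = 0  # number of valid samples, at most capacity

    def push(self, sample: int) -> None:
        self._data[self._next] = sample
        self._next = (self._next + 1) % len(self._data)
        self._count = min(self._count + 1, len(self._data))

    def snapshot(self) -> List[int]:
        """Valid samples in chronological order (oldest first), e.g. for USB readout."""
        if self._count < len(self._data):
            return self._data[: self._count]
        return self._data[self._next :] + self._data[: self._next]
```

A 14-bit converter delivers codes in the range 0 to 16383. With a large enough capacity, a snapshot covers the window of interest that is then analyzed with an FFT in Matlab.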

process Analog Circuit Design with PSPICE -> Design Capture for Routing -> Frequency Simulations for Choosing Termination Resistors -> PCB production and part mounting -> 3D Scan and XRAY of manufactured Hardware -> VHDL configuration -> Power ON -> 10 MHz Analog Signal On -> Connect via USB to PC -> Open Matlab -> Run FFT

Six-month partnership with a sensorics company south of Munich

info

Excerpts from my personal notes. Each note is on a different matter, and most were originally drafted in German. Every note is first listed in its original language and then translated into English or German.

meta

Through the Critical Theory class on technology taught by Morehshin Allahyari at the School for Poetic Computation in NYC, which I attended in the spring term of 2018, I discovered my passion for writing.

process

I write down thoughts daily in Apple Notes, which is synced across all my devices. Most of them are short phrases and paragraphs, but some expand over time. I make selected excerpts of my personal notes available in my github repository on writings.